The amount of information gathered through video is staggering, and most of it is sensitive. Security footage can go back weeks or even months, as businesses stockpile this data to help them plan and run their operations. AI analytics operates on this video and may need a long span of footage to complete its tasks. AI can do wondrous things, but do most businesses fully understand the data risk they take on when working with third-party AI providers? This matters all the more because the data used for such analytics is always sensitive, yet not all of it is required for the task at hand.

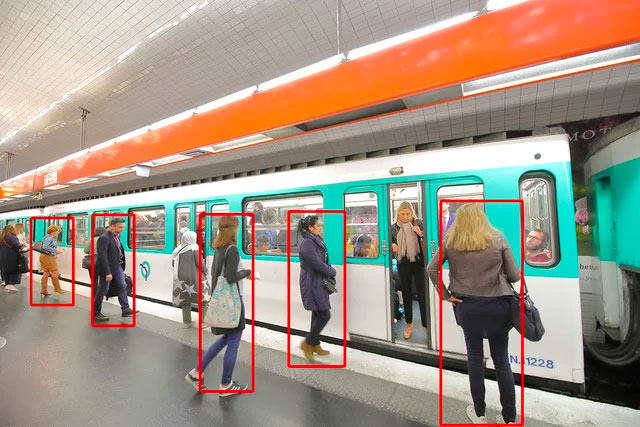

Because so much private and confidential data is being compiled, businesses need to understand both the privacy risks to their customers and the business risks posed by the raw footage. Take, for instance, a transit system recording a particular subway platform. At rush hour, when the platform is packed with commuters, every individual on it has privacy concerns. But at 3 A.M., even the empty platform still holds a wealth of confidential information about the station and its infrastructure. Both represent a risk, and both need to be protected.

You may assume that your AI vendor is protecting your data, but the devil is in the details. How well do you know their actual terms and conditions? Are your business’s data and your customers’ data truly protected? Probably not.

AI vendors may boast about encryption, but that encryption is not end-to-end. Confidential data can be encrypted at rest and in transit; however, when the real work happens during inference, the data must be decrypted. That unencrypted data exposes your business to risk, a detail vendors typically gloss over.

How does a business protect both its customers’ privacy and its own confidential data during AI inference? Almost all AI vendors rely on the security of the computing devices where inference happens. But it has been shown again and again that these devices can be compromised. Once an attacker gains control of the hardware, they can access all the data on that server, because inference requires the data to be unencrypted. Access to video data during inference is effectively access to your secure video feed.

As we have all learned in the realms of digital privacy and security, the question is not if there will be a compromise, but when. Many businesses learn about a compromise in the worst way possible: publicly, from a third party, which forces them into a reactive, damage-control posture.

If your business relies on video analytics from an AI service provider, it is essential that you understand the answers to these critical questions:

- In any video stream, only a portion of the information is directly relevant. How much of the streamed information is not needed, and how much risk is incurred in handling this unneeded data?

- How, exactly, is confidential data protected at each step of the AI analytics process? Specifically, how are you protecting my customers’ privacy and my business’s confidential data during inference?

- What is your notification process for hardware compromise? Will you be able to tell me how much of my data has been compromised and for how long?

- What indemnity do you offer in the event of a compromise? How is my business protected?

If you can’t get direct answers to these questions, it is time to talk to Protopia.

Our Confidential Inference redacts the non-essential (yet still private or confidential) data, delivering to the AI application only the data needed for the inference task. As the world’s only provider of data-centric and hardware-agnostic confidential inference, we can help you assess the risks you face and find better ways to protect both your business and your customers.