Harnessing Value from AI Without the Sensitive Information

The internet has been shaken by news that sensitive images captured by a robot vacuum company's devices leaked online. The device in question is a self-driving robot vacuum designed to record video of its surroundings while cleaning. According to experts, this recording is part of ongoing efforts to train artificial intelligence. Gathering real data from real environments to improve AI is valuable to everyone, but it need not result in sensitive information leaking in this manner.

Although robot vacuum makers maintain that customers' images are safe, this incident raises several questions. First, are the robot vacuum cleaners (or other devices) in your home taking sensitive photos of you and your loved ones? Second, how much personal data are your smart devices collecting, and what is it used for? Finally, is there a way to extract value from data without compromising customers' privacy?

This article answers these questions—and more.

How Did Sensitive Pictures from a Robot Vacuum Leak Online?

Imagine living alone, only to find pictures of yourself using the bathroom posted online. It may sound like a bad joke, but it actually happened in 2022.

In late 2022, MIT Technology Review reported that 15 private photos of varying types and sensitivity had made their way online. The images, all taken by development versions of a robot vacuum, were shared in various private Facebook groups. Among the most intimate was a shot of a young woman in a purple shirt using the toilet. Her face is unobscured in some of the grainy images.

Another picture showed a young boy lying on his stomach on the floor, looking at the device as it recorded him from just below eye level. Other images showed rooms in homes around the globe, annotated with labels such as "ceiling light" and "TV."

As for how this happened: contractors hired to label data for the robot vacuum company inadvertently leaked the images.

Do People Truly Know What They've Signed Up For?

The leaked images were captured by "special development robots." The photos came from "paid collectors and employees" who had opted to share videos and other data streams for training purposes. These people signed agreements and were advised to "remove anything they deem sensitive from any space the robot operates in." Still, whether it's those employees or the rest of us, how well would anyone fully understand the terms?

These test robots may indeed differ from the models sold to customers. The incident is still alarming, because it reminds us how often customers unknowingly consent to varying degrees of data monitoring buried in fine print and vague language when they use smart devices.

The truth is that artificial intelligence grows hungrier for human data by the day as it is integrated into more types of products and services. AI thrives on large volumes of customer data, which are used to train algorithms to recognize patterns.

The more realistic the datasets are, the more useful they become, so AI companies find information from real environments very valuable. Given how invasive these smart devices can be, this raises the question of how much more sensitive data these companies already hold.

Another problem is that the paid data labelers are humans who view those images. "It's much easier for me to accept a cute little vacuum, moving around my space [than] somebody walking around my house with a camera," says information scientist Jessica Vitak. Eerily, the equivalent is happening in AI companies' data supply chains.

Data from the test devices was combined into large batches, giving more people access and creating more avenues for leaks. During the annotation process, third parties (such as the contractors) must view, categorize, and label the images to give the datasets context and make them useful for AI and machine learning.

Data Security and Privacy Are Not the Same

First, it's important to understand that while data security and privacy are intertwined, they aren't the same thing. Data security refers to protection from unauthorized access, while data privacy refers to how transparent the processes of collecting, sharing, and using data are. According to Jen Caltrider, lead researcher on Mozilla's "*Privacy Not Included" project, "security has gotten better, while privacy has gotten way worse…the devices and apps now collect so much more personal information."

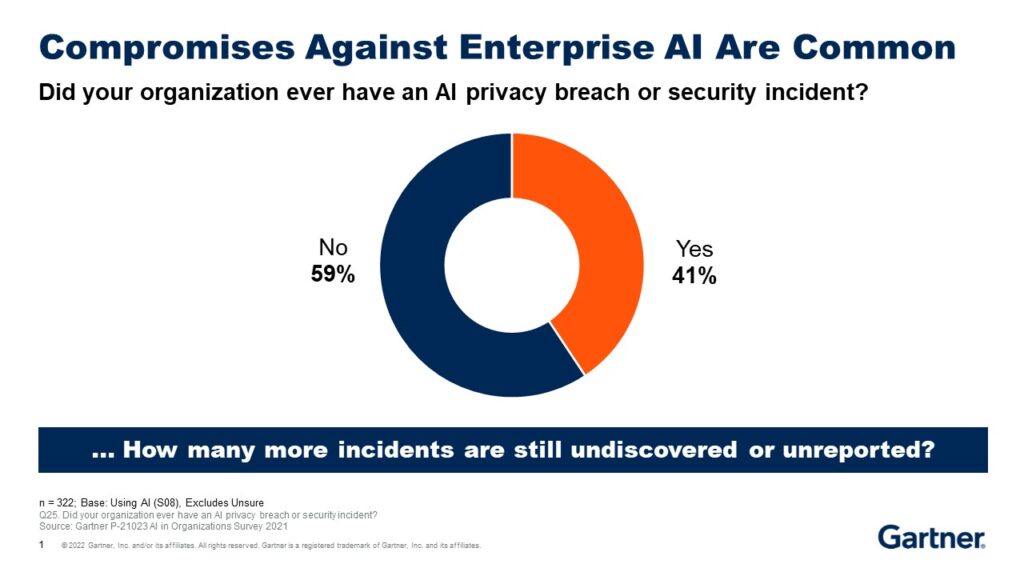

The implications of this privacy breach are far-reaching. As in the robot vacuum case, personal data can end up with people who have no business accessing it. A 2021 Gartner survey of companies found that malicious attacks against enterprise AI can be frequent.

In a breach like this, anyone who obtains images of your face could use them to identify you in other videos or photos. Third parties with access to your data may also sell it to others for fraud and other illicit purposes. For the companies handling the data, privacy breaches can cost them public trust and damage their reputation. Using AI comes with responsibility. In fact, the World Economic Forum champions "Responsible Artificial Intelligence," the practice of creating AI systems in a way that empowers people and businesses, enabling companies to scale and to build trust in AI.

What Does a Responsible Solution Look Like?

Is responsible AI possible? Yes, but it will require awareness of, and education about, the challenges around privacy and data access. AI introduces new trust, risk, and security management requirements that companies and end users may not even be aware of. Equipping all parties with the right technology will also be imperative. For example, with a solution like Protopia AI, companies can access and share real data without exposing it to unscrupulous parties, while still deriving insight from sensitive data. Protopia AI's Stained Glass™ solution transforms data as it comes from the source: it learns what AI models need, removes what they don't, and garbles incoming data as much as possible with minimal loss of accuracy. This transformation is performed by the enterprise that collected the data, before the data leaves the source where it was securely collected. This minimizes the number of entities that must be trusted with the original sensitive data.

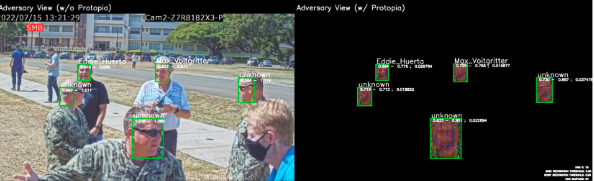

Protopia AI has also seen early success with a product demonstration, alongside NetApp, at the U.S. Navy's Trident Warrior 2022 program in July.

Pictured above is an in-field deployment of the Stained Glass Transform at the U.S. Navy Trident Warrior 2022 exercise. Real-time facial recognition identifies persons of interest, while everything else in the feed is garbled. Organizations must distinguish what is sensitive from what is not, and minimize exposure of private information wherever possible.
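The idea described above, keeping what the task needs and garbling the rest before a frame leaves the device, can be illustrated with a deliberately simple sketch. This is not Protopia AI's Stained Glass Transform, whose internals this article does not describe; it is a generic stand-in that assumes the regions of interest (for example, a detected face) have already been located as bounding boxes. The grayscale frame format, the hypothetical `garble_outside` and `in_any_box` helpers, and the choice of block pixelation are all assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical types for illustration: a grayscale frame as rows of 0-255 ints,
# and a region of interest as (top, left, bottom, right), bottom/right exclusive.
Image = List[List[int]]
Box = Tuple[int, int, int, int]


def in_any_box(r: int, c: int, boxes: List[Box]) -> bool:
    """Return True if pixel (r, c) falls inside at least one kept box."""
    return any(top <= r < bottom and left <= c < right
               for top, left, bottom, right in boxes)


def garble_outside(image: Image, keep: List[Box], block: int = 4) -> Image:
    """Pixelate everything outside the kept boxes (hypothetical helper).

    Each block x block tile is replaced by the integer mean of its original
    pixels; pixels inside a kept box are copied through unchanged.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    out = [row[:] for row in image]  # copy so the input frame is not mutated
    for top in range(0, height, block):
        for left in range(0, width, block):
            tile = [(r, c)
                    for r in range(top, min(top + block, height))
                    for c in range(left, min(left + block, width))]
            mean = sum(image[r][c] for r, c in tile) // len(tile)
            for r, c in tile:
                if not in_any_box(r, c, keep):
                    out[r][c] = mean
    return out


if __name__ == "__main__":
    # Synthetic 8x8 frame; keep only a top-left 2x2 "person of interest".
    frame = [[8 * r + c for c in range(8)] for r in range(8)]
    shared = garble_outside(frame, keep=[(0, 0, 2, 2)], block=4)
    for row in shared:
        print(row)
```

Only the garbled copy would be sent onward; the original frame stays where it was collected. Pixelation is used here because it is easy to follow, not because it is a strong privacy guarantee on its own.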

Gaining More Access and Control with Protopia AI

When it comes to data accessibility and protection, AI solutions will need to balance safety and effectiveness. Fintech leader Q2 uses Protopia AI's technology to reach potential clients who were previously unapproachable because of internal protocols restricting SaaS solutions. The Q2 Sentinel team is using Protopia AI's Stained Glass Transform™ to expand adoption of its latest cloud-based Fraud Detection Solution to clients that are underserved or unserved today, detecting fraudulent checks without needing to analyze plain check images.

The need for responsible AI is starting to get attention. Companies such as Protopia AI aim to do right by people and businesses by generating value from real data while safeguarding their interests. Protopia AI provides the industry's first software-only solution that enables data protection and privacy for all ML tasks and data types, and was recognized by Gartner in 2022 as a Cool Vendor in AI Governance and Responsible AI. As teams look to innovate and improve with more data, tools such as Protopia AI will become critical to enabling safe and effective AI.

With the leaked photos making the rounds online, concerns about data collection and privacy have resurfaced. As AI continues to grow, it has never been more important for companies to be fully transparent in how they handle data, so that AI is used responsibly. Companies should not focus on tightening security alone; they should also explore privacy-centered software that extracts value from data without revealing sensitive information.

Explore more use cases on Protopia AI’s solution page

To learn more, get in touch with an expert or try our demo on some images.