Machine learning inference services are pervasive, powering many of the applications consumers rely on every day. However, the data these services consume, much of which is not germane to the task at hand, can still be exposed, because inference computation is almost always performed on unencrypted data. This is a structural gap in the privacy of data used by AI, and it creates an opportunity for data thieves who actively seek sensitive or private information about businesses, their partners, or their customers.

Protopia AI is the first company to address this privacy gap during inference, using a patented, software-only mathematical approach to protecting data privacy.

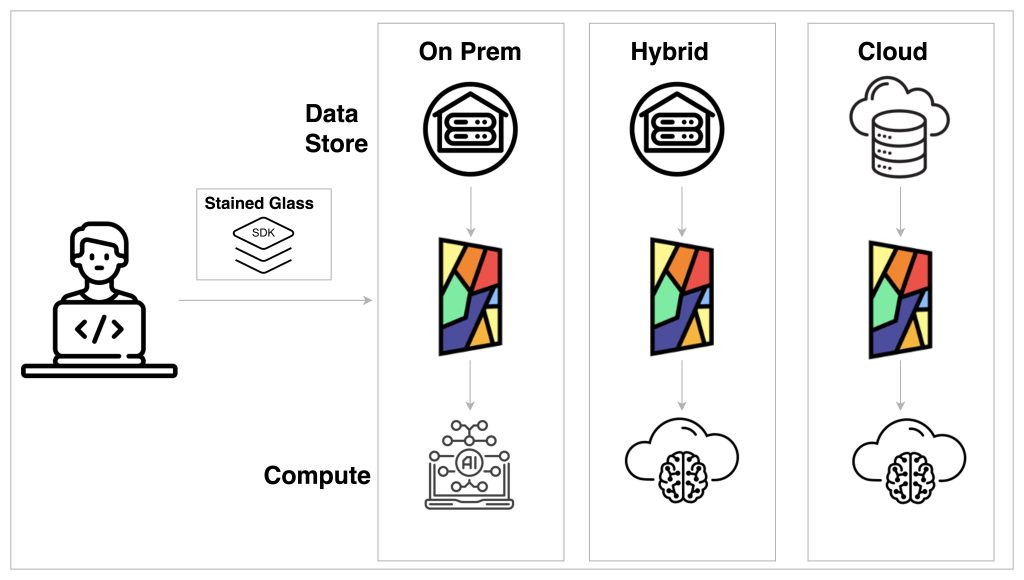

End-to-End Data Protection

Data exists in three states: at rest, in transit, and in compute. Any AI inference service should protect data from threats throughout this entire process, yet current solutions do not.

Data at rest can be protected by encrypting storage systems with AES. Once data moves across the network, TLS secures it in transit. The chain breaks down during the actual processing of the data at inference time, where information is turned into action. This is arguably both the most important and the most vulnerable step in the chain.

Protecting data during inference is critical because the process can expose private information about both external customers and the business providing the inference service. For example, consider machine learning (ML) inference performed on a business's security video footage. The video may include customers' personally identifiable information, but it also reveals sensitive information about the layout of the company's buildings and its operations. In this example, the risk of exposure extends to the company, its customers, and potentially even third-party vendors.

The Value and Pervasiveness of AI Inference

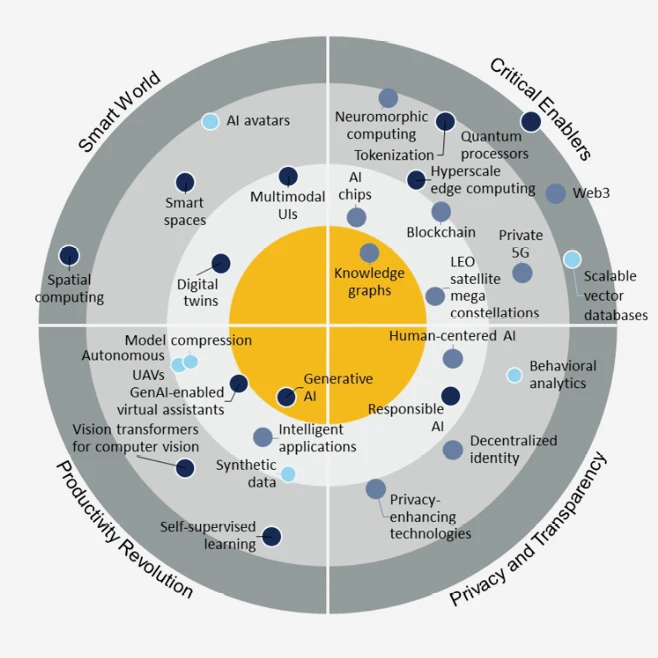

In our always-connected world, nearly every aspect of modern life is being turned into data that is continuously inferred upon to deliver a variety of life-transforming services. By some estimates, 95% of all data processed for AI is used for inference services.

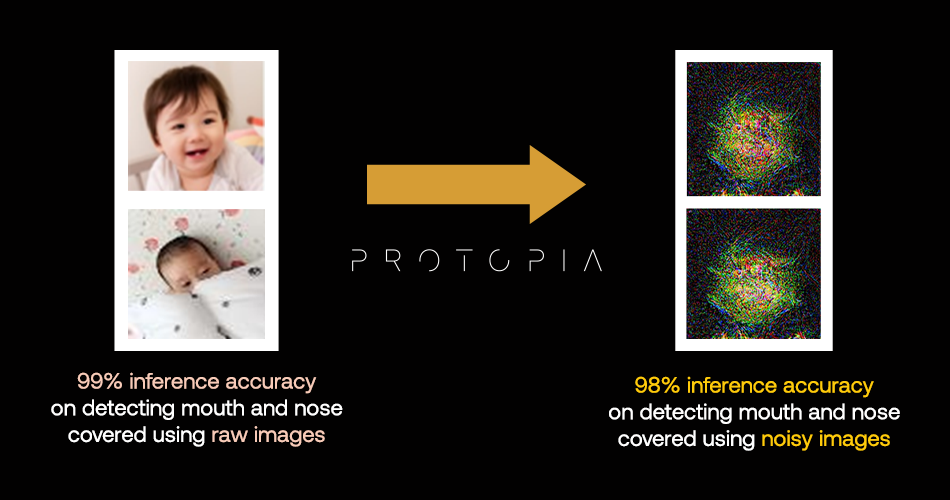

For example, when someone asks a home automation system to turn on the lights, the inference service should not be able to deduce whether a woman or a man is making the request, how many people are in the room, or what they are talking about. A baby monitor inferring whether a baby's face is covered should not be able to determine the baby's gender, hair color, or identity, or anything or anyone else in the baby's vicinity. Such threats have been reported repeatedly in the press as these devices become more integral to our lives.

All of these advances are transforming our lives for the better, but they carry a downside risk: the data records used by inference processes intermingle actionable information with private information. Businesses must distinguish between the two and minimize the exposure of private information wherever possible.

The Challenge of Inference Privacy

Unfortunately, the tools available today for protecting inference privacy, such as fully homomorphic encryption (FHE) and secure multiparty computation (SMPC), slow AI services down by orders of magnitude. Users expect instant answers from AI, and in use cases like autonomous driving or healthcare, a slow response could be dangerous or even deadly for end customers.

Today's world demands a careful balance of protection and performance, yet existing technologies force a business to choose: lean into the power of AI while putting the business at risk, or take the “safer” path that stifles innovation and leaves it scrambling behind more aggressive competitors. Data leaks and data abuse will always be a real business risk, so there needs to be a better way to reconcile two seemingly conflicting goals: using AI and protecting customer privacy.

Introducing Protopia

Protopia AI is the only unobtrusive, software-only solution for Confidential Inference on the market today. We deliver unparalleled protection for inference services by minimizing the exposure of sensitive information. With Protopia, AI is fed only the parts of each data record that are truly essential to carrying out the exact task at hand, and nothing more.

Most inference tasks do not need all of the information in every data record. Whether your AI consumes images, voice, video, or structured tabular data, Protopia delivers only what the inference service needs. Our patented core technology uses a mathematical approach to redact any information that a given service does not need and replace it with curated noise.
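To make the idea of redaction concrete, here is a minimal, hypothetical sketch in Python. It is not Protopia's patented technique. It assumes a record is a flat list of numeric features and that a hand-picked `keep_mask` already says which features the task needs; every other feature is overwritten with plain Gaussian noise (not curated noise) so its original value never reaches the model. The function name `redact_with_noise` and its parameters are illustrative only.

```python
import random
from typing import List, Optional, Sequence


def redact_with_noise(
    record: Sequence[float],
    keep_mask: Sequence[bool],
    noise_std: float = 1.0,
    seed: Optional[int] = None,
) -> List[float]:
    """Return a redacted copy of `record` for inference.

    Hypothetical illustration, not Protopia's method: features whose
    `keep_mask` entry is True pass through unchanged; every other feature
    is replaced with zero-mean Gaussian noise, so its original value is
    never sent to the model. The input sequence is not modified.
    """
    if len(record) != len(keep_mask):
        raise ValueError("record and keep_mask must have the same length")
    rng = random.Random(seed)  # seeded RNG so the example is reproducible
    return [
        value if keep else rng.gauss(0.0, noise_std)
        for value, keep in zip(record, keep_mask)
    ]


if __name__ == "__main__":
    # Assumed toy record: four numeric features, only two of which
    # (indices 0 and 2) are needed by the downstream task.
    record = [0.9, 0.2, 0.7, 0.4]
    keep_mask = [True, False, True, False]
    print(redact_with_noise(record, keep_mask, seed=42))
```

A real system would need to learn which parts of the data a task depends on and shape the noise accordingly; the hand-written mask here only shows where redaction sits in the pipeline, just before the data reaches the model.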

If AI is to fulfill its promise of shortening cycles and driving better insight, any privacy solution needs to run at the speed of your business, not drag it down. Our software-only solution integrates seamlessly with your existing AI framework without slowing it down, and it requires neither platform changes nor an overhaul of your existing inference pipeline. Our noise delivery platform simply layers into your existing AI pipelines so that you keep ownership of your data at all times.

Protopia's solution, although complementary to existing Confidential Computing initiatives, differs from them in two important ways. First, our technology is software-only and does not require hardware changes. Second, in Confidential Computing the data is still processed in its original form, so if the hardware is compromised (which is always possible), all the information in each data record is exposed. Protopia redacts the data before compute, minimizing exposure even if the hardware is compromised.

By allowing only the necessary features from each data record onto the compute platform in the first place, Protopia's solution minimizes privacy exposure during inference regardless of the underlying hardware's security guarantees.

In the following example, a baby monitor needs to detect whether the baby's mouth and nose are covered. Today, inference runs directly on the unencrypted pictures on the left, which yields 99% accuracy but potentially exposes 100% of the private information in each image. With Protopia, the same neural network achieves 98% accuracy on the redacted pictures on the right, with 0% of the privacy exposed.

How Are You Protected?

Many businesses protect their data at rest and in transit, but most remain at risk because that protection does not extend all the way to the in-compute phase.

As the spotlight on AI and privacy intensifies, scrutiny will increase. Businesses will either lead the market or be forced to follow in the footsteps of competitors who are taking decisive action now.

Adopting Protopia's seamlessly integrated, unobtrusive solution today gives businesses a major differentiator over their competition and helps build stronger brand equity in an increasingly competitive market.

AI has become an important driver of most business strategies. As inference technology advances, we cannot allow privacy to suffer further. Data protection needs to lead any AI implementation, not follow it.

Protopia: delivering the data required for inference, nothing more.