Architecting for Trust: Building Secure AI Systems

In partnership with Sol Rashidi, former CDAO at Fortune 100 organizations, and best-selling author

This article, the final installment of our three-part series on secure data and impactful AI, outlines key principles and considerations to help you architect data systems and flows for secure and impactful AI.

To recap, Part 1 explored solutions for enterprise leaders to overcome organizational and people challenges that impede AI projects. Part 2 offered insights into new AI-related threat vectors and risks that leaders must contend with. Both articles demonstrated how Protopia AI’s Stained Glass Transform (SGT) solution offers critical capabilities to unblock innovation and accelerate time-to-value.

In this article, we’ll cover:

- Why AI systems necessitate new architectural considerations.

- Layers of AI applications – risks and protective measures.

- The security vs availability dilemma of compute infrastructure.

- Unlocking infrastructure availability with SGT.

- Principles for architecting AI systems.

- Cultural considerations for secure and impactful AI.

Why AI systems necessitate new architectural considerations

As AI adoption explodes across all industries and organizations, data leaders are challenged to unlock AI-critical data flows that generate value but don’t compromise trust and data security.

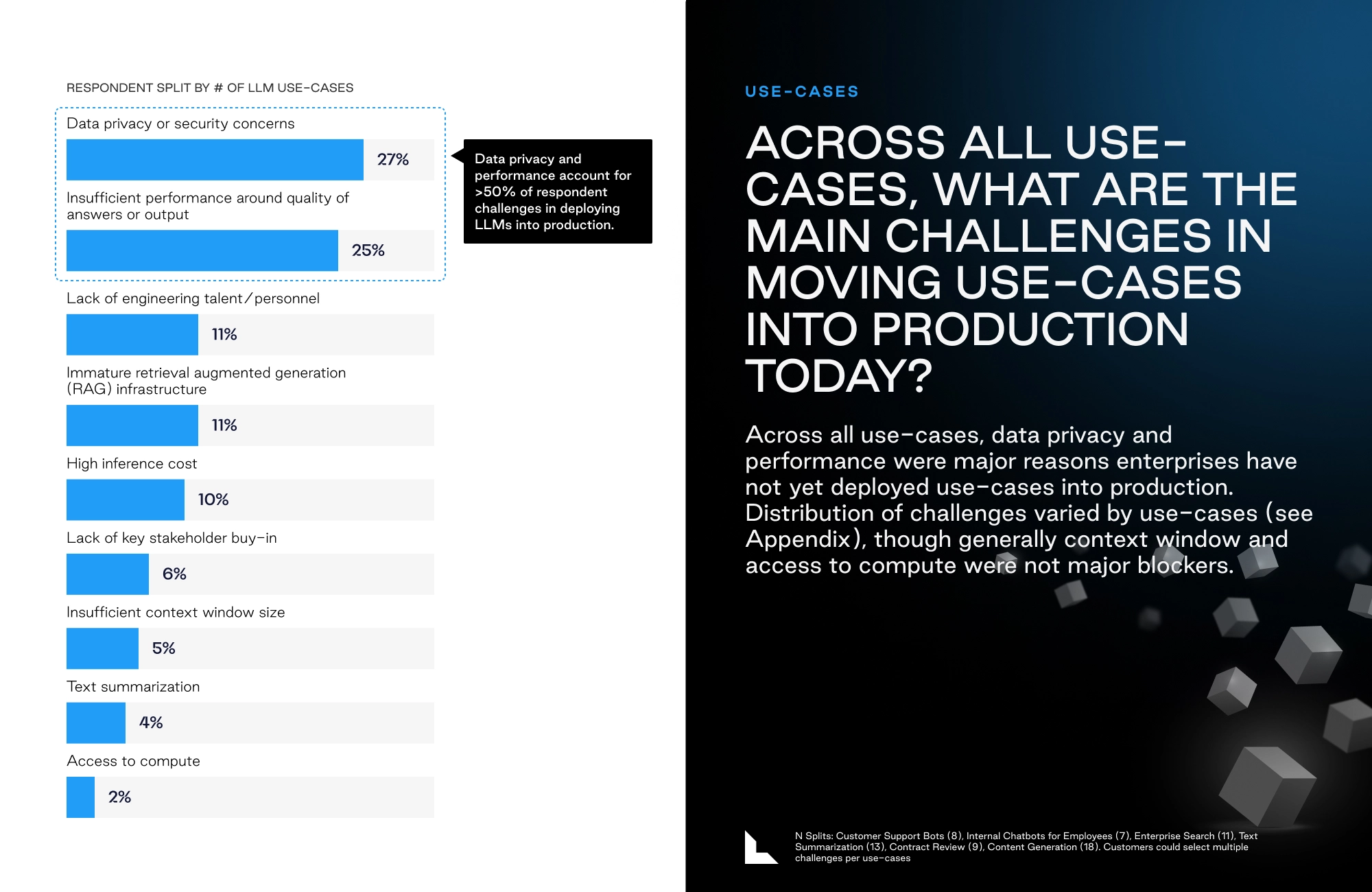

A recent survey by Lightspeed Ventures on enterprise AI adoption revealed that leaders rated concerns about data privacy and security as their biggest barrier. More than 50% of respondents noted data privacy concerns and inaccurate, low-quality outputs as the biggest impediments to AI adoption.

Figure 1 – Top challenges for moving generative AI use cases to production, source: Lightspeed Ventures

Leaders express concerns about leakage of sensitive information and the compounding of cybersecurity risks as enterprises build and deploy AI systems. To this end, industry standards such as the OWASP Top 10 for LLM Applications focus on identifying critical security vulnerabilities and mitigation strategies for systems built with AI and Large Language Models (LLMs). The OWASP Top 10 list helps organizations prioritize their security investments and plan defense strategies.

Of these risks, many depend directly on an organization’s architectural choices for its AI systems:

- LLM06: Sensitive information disclosure when models and LLM applications reveal sensitive information and confidential details, leading to data breaches or information theft.

- LLM02: Insecure output handling when the AI application produces outputs that are not sanitized or validated, exposing backend systems to risks such as cross-site scripting (XSS) and SQL injection.

- LLM05: Supply chain vulnerabilities where the data or libraries used during the development and fine-tuning of LLMs are compromised, leading to potential exploits.

- LLM10: Model Theft where unauthorized parties can access, steal or misuse proprietary LLM models. This is often a consequence of exploiting vulnerabilities (such as weak authentication) in a company’s infrastructure.
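As a minimal sketch of one mitigation for insecure output handling (LLM02), the Python snippet below treats model output as untrusted input: it escapes the text before placing it in HTML and passes it to SQL only as a bound parameter. The function names `render_reply` and `store_reply` and the `replies` table are hypothetical and chosen purely for illustration; only the standard-library `html` and `sqlite3` modules are used.

```python
import html
import sqlite3


def render_reply(llm_output: str) -> str:
    """Escape model output so any markup in it is displayed, not executed."""
    return f"<p>{html.escape(llm_output)}</p>"


def store_reply(conn: sqlite3.Connection, llm_output: str) -> None:
    """Store model output using a bound parameter, never string formatting."""
    conn.execute("INSERT INTO replies (body) VALUES (?)", (llm_output,))
    conn.commit()


# Illustrative usage with an in-memory database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE replies (body TEXT)")

malicious = "<script>alert('x')</script>'); DROP TABLE replies;--"
page = render_reply(malicious)   # markup is neutralized for display
store_reply(conn, malicious)     # text is stored verbatim, not executed as SQL
stored = conn.execute("SELECT body FROM replies").fetchone()[0]
```

Escaping and parameter binding address only the XSS and SQL injection examples named above; other downstream consumers of model output (shells, file paths, templating engines) would need their own context-appropriate handling.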

AI regulations continue to proliferate rapidly, and regulatory compliance is another concern senior leadership must contend with. The EU AI Act is now in the process of being implemented, while many directives from President Biden’s 2023 executive order on AI have been enacted. More than 40 US states have introduced AI-related bills to enforce standards and protect consumers from potential misuse of AI.

Rigorous access controls, monitoring, security audits and controls as well as data protection techniques are among the most important measures organizations can take to protect their models, sensitive data and consumers from harm.

Figure 2 – Gartner’s prediction on secure AI growth

Traditional “castles and moats” approaches to security, which only focus on network perimeters, are no longer sufficient for AI.

Layers of AI applications – risks and protective measures

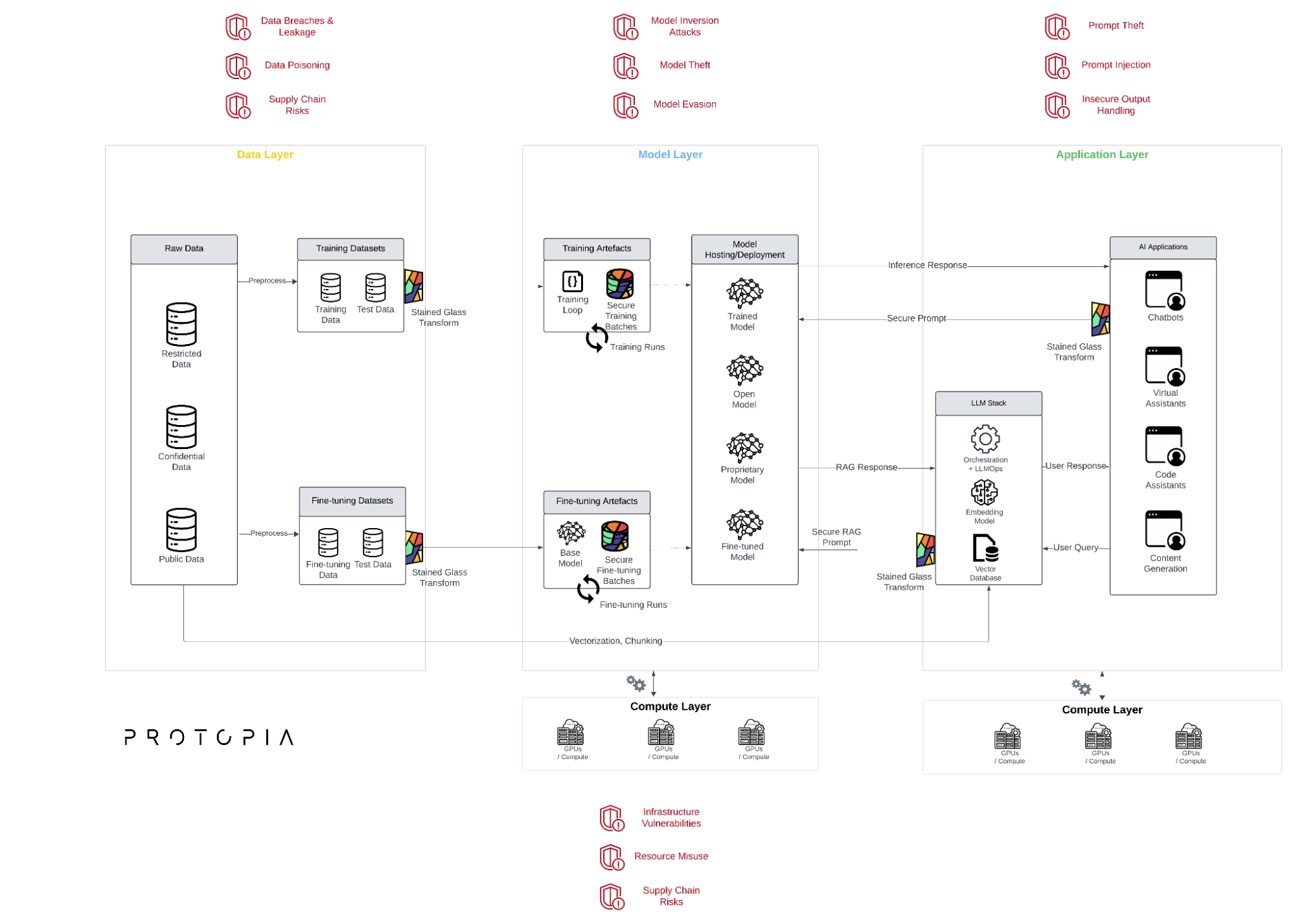

Figure 3 – Risks by layer of AI system

AI and LLM applications span multiple architectural ‘layers’ – data, model, end-user application and compute infrastructure. The layers are covered in depth in our earlier blog. Here is a quick recap of key risks associated with each layer.

| Layer | Key Risks | Recommended Mitigation Strategies |
|---|---|---|
| Data Layer | Data Breaches and Leakage: Unauthorized access to sensitive data during storage or transmission can lead to significant financial losses, reputational damage, and regulatory penalties.<br>Data Poisoning: Adversaries can manipulate training data to introduce bias or backdoors into AI models, compromising their accuracy and reliability.<br>Data Provenance and Integrity: Ensuring the authenticity and integrity of data used in AI systems is crucial to prevent the use of tampered or unreliable data.<br>Supply Chain Risks: Modern AI systems often consume large public datasets, which if malicious, introduce an additional threat vector organizations must contend with. | Enforce granular access controls, and establish robust data governance policies. Implement Protopia’s Stained Glass Transform (SGT) for strong protection of data at rest, in transit, and in use scenarios. |
| Model Layer | Model Inversion Attacks: Attackers can use model outputs and knowledge of the model’s structure to infer sensitive information about the training data.<br>Model Theft: Valuable AI models can be stolen and used by competitors or malicious actors.<br>Model Evasion: Adversaries can craft inputs to deceive AI models and bypass security controls. | Protect model supply chain, secure model training and deployment pipelines, implement strong authentication and access control, utilize monitoring and anomaly detection to identify suspicious activity, and consider using techniques like SGT to enhance protection during model training, fine-tuning, and inference. |
| Application Layer | Prompt Injection: Malicious prompts can manipulate AI chatbots or other applications to divulge sensitive information, bypass security controls, or perform unauthorized actions.<br>Insecure Output Handling: AI applications may generate outputs that contain sensitive information or could be used for malicious purposes. | Validate user inputs, sanitize AI outputs, implement authentication and authorization mechanisms, and incorporate human oversight into critical decision-making processes. SGT protects your prompts and sensitive information against exposure. It complements technologies like guardrails to ensure application security. |
| Compute Layer | Overallocation of Resources: While some parts of AI workflows consume significant compute resources (for example fine-tuning/training), trying to secure data by over allocating dedicated yet underutilized compute resources can spiral costs out of control.<br>Infrastructure Vulnerabilities: Compromised compute infrastructure can expose AI systems to data breaches and other attacks. Third party vendors in charge of implementing AI applications often introduce infrastructure security concerns your organization can do little to contain.<br>Supply Chain Risks: Vulnerabilities in third-party software or hardware used in AI systems can introduce security risks. | Secure compute infrastructure, hardened operating systems and software components, monitoring and control across the extended actors who may have access to your sensitive data and proprietary models. By minimizing exposure of plain-text data, SGT dramatically reduces the attack surface of your data on your compute infrastructure. |

Table 1 – Risks and mitigation strategies for AI systems
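One concrete control for the data provenance and supply chain rows in Table 1 is to check an artifact against a known-good digest before it enters a training or inference pipeline. The sketch below is one possible approach, not a prescribed method: `sha256_of` and `verify_artifact` are hypothetical helper names, and the code assumes the expected SHA-256 digest was obtained out of band from a trusted source.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets or weights fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file does not match its published digest."""
    actual = sha256_of(path)
    # compare_digest avoids leaking how many leading characters matched.
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"checksum mismatch for {path.name}")
```

A pipeline would call `verify_artifact` before loading a downloaded dataset or model file and refuse to proceed on failure. A digest check detects tampering after publication; it does not establish that the published artifact was trustworthy in the first place.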

The security vs availability dilemma of compute infrastructure

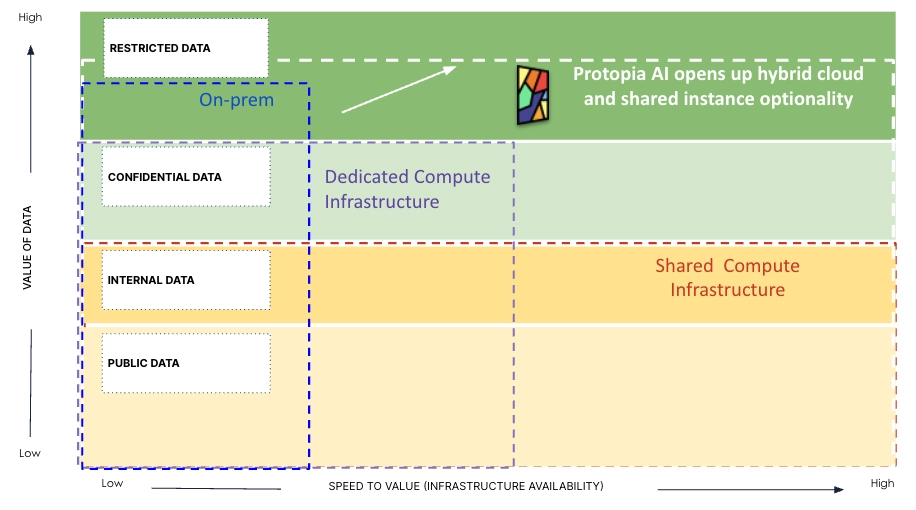

As we noted in the first part of the series, enterprises must methodically classify their data assets. The classification and sensitivity of those assets often determine which compute infrastructure an organization chooses for its AI workloads.

Figure 4 – How Classification of Enterprise Data Assets Affects Infrastructure Decisions

Organizations tend to limit AI applications that operate on restricted data to on-premise compute. AI applications that operate on confidential data often are executed within a reserved/dedicated compute environment. AI systems that rely on internal data and public data can be run in shared or public environments due to the cost and scalability advantages of public cloud.
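The mapping described above can be written down as a small policy table. The sketch below is illustrative only: the `Sensitivity` enum, the `COMPUTE_BY_SENSITIVITY` table, and the `required_compute` function are hypothetical names, and the rule that the most sensitive dataset decides the environment for the whole workload is an assumption rather than something the classification scheme itself mandates.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Data classification tiers, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Typical placement per tier, following the paragraph above.
COMPUTE_BY_SENSITIVITY = {
    Sensitivity.PUBLIC: "shared or public cloud",
    Sensitivity.INTERNAL: "shared or public cloud",
    Sensitivity.CONFIDENTIAL: "reserved or dedicated compute",
    Sensitivity.RESTRICTED: "on-premise compute",
}


def required_compute(datasets: dict[str, Sensitivity]) -> str:
    """Assumed rule: the most sensitive dataset decides the environment."""
    if not datasets:
        raise ValueError("a workload must declare at least one dataset")
    strictest = max(datasets.values())
    return COMPUTE_BY_SENSITIVITY[strictest]
```

Under this assumed rule, a workload that joins a public FAQ corpus with a single restricted dataset is pinned to on-premise compute, which is exactly how a small amount of sensitive data can push an entire application onto scarce, expensive infrastructure.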

This creates a major conundrum.

On one hand, dedicated hardware and GPUs offer a higher sense of control over who can access systems that contain confidential or restricted data, but at prohibitive costs or at protracted time to value. The ongoing surge in AI application development has led to critical GPU shortages, soaring prices and growing backorders. This widespread demand means even hyperscalers can’t always offer enough dedicated compute availability, leading to costly, long-term (often multi-year) reservation requirements.

On the other hand, while multi-tenant compute for AI workloads from hyperscalers or cloud service providers is cost-effective, performant, and highly available, it is shared by multiple customers of the service provider. Enterprises are wary of placing restricted or confidential data on shared infrastructure, so they limit such environments to non-sensitive data.

Merely isolating physical compute hardware isn’t adequate to protect sensitive data. A variety of techniques are required to narrow the attack surface. This is illustrated by Apple’s recent launch of their Private Cloud Compute (PCC) system to protect customer information and perform private AI processing. While powerful hardware in the data center can fulfill user requests with large, complex machine learning models, such hardware requires unencrypted access to the user’s request and accompanying personal data. Despite the provider’s best security measures, AI workloads in data centers still carry risks of exposure when breaches happen, because data that is encrypted at rest and in transit must be decrypted for processing. This is due to inherent problems in how data centers operate:

- It is difficult to verify security or privacy guarantees: As one example, even if a service provider claims they don’t log user data, underlying systems like perimeter load balancers or background logging servers may still record thousands of sensitive customer records. Such implementation details make security and privacy guarantees difficult to fully assess or enforce durably.

- Service providers do not offer full transparency into their underlying runtime stack: Providers usually do not disclose the specifics of the software stack they use to operate their services, and these details are often proprietary. Even if a cloud AI service exclusively used open-source software, which can be examined by enterprise buyers, there is currently no widely adopted method for a user device to verify that the service it’s connecting to is running an unaltered version of the claimed software or to detect if the software on the service has been modified.

- It is challenging to enforce strong limits on privileged access in cloud environments: Cloud AI services are intricate and costly to operate at scale, with their performance and operational metrics constantly monitored by site reliability engineers and other administrative staff at the cloud service provider. During outages or critical incidents, these administrators often have access to highly privileged interfaces, such as SSH or remote shell interfaces. While access controls for these privileged, break-glass interfaces may be well-designed, it is extremely difficult to impose enforceable limits when they are actively in use. For instance, a service administrator attempting to back up data from a live server during an outage could accidentally include sensitive user data in the backup. More concerning is the fact that criminals, like ransomware operators, frequently target service administrator credentials to exploit these privileged access interfaces and steal user data.
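The logging example in the first point above is one place where an application team can reduce exposure on its own side of the boundary. The sketch below is a partial safeguard under stated assumptions, not a guarantee: it uses Python's standard `logging.Filter` hook to mask email addresses and US Social Security number patterns before a record is emitted. The `redact` helper, the `RedactingFilter` class, and the two regular expressions are hypothetical, and pattern matching will miss sensitive values that do not fit these shapes.

```python
import logging
import re

# Illustrative patterns only; real deployments need a broader, tested set.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Mask email addresses and SSN-shaped numbers in a string."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return US_SSN.sub("[REDACTED_SSN]", text)


class RedactingFilter(logging.Filter):
    """Rewrite each record so handlers only ever see the redacted message."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format first so values passed as arguments are redacted too.
        record.msg = redact(record.getMessage())
        record.args = None
        return True


# Attach to a handler so every record passing through it is filtered.
handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
```

This only covers logs the application itself writes. It does nothing about load balancers, proxies, or provider-side logging, which is why the verification problem described above remains.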

Thus, even with dedicated compute resources, the challenges to data protection are significant. If you seek high-availability compute at manageable costs, it’s essential to employ multiple strategies to minimize your data’s attack surface, while retaining maximum utility. Protopia AI’s Stained Glass Transform can help.

Unlocking infrastructure availability with SGT

Protopia AI’s Stained Glass Transform can help lower your exposure to data-related threats across all compute environments, whether multi-tenant shared, dedicated cloud, or on-prem.

By transforming sensitive information into secure, stochastic representations, SGT empowers you to safely share your data across distributed compute environments. These representations do not expose your sensitive data in plain-text to unintended actors nor to the underlying infrastructure, thereby improving your security posture and expanding the availability of hardware where you may run your AI workloads.

Principles for architecting AI systems

As AI adoption continues to grow, organizations are starting to align behind several guiding principles to architect their systems:

- Defense in depth: In this approach, multiple layers of security controls are applied to protect AI systems, models and data. Rooted in traditional cybersecurity, the principle has been extended to cover AI-specific risks as well. Implementing defense-in-depth requires narrowing attack surfaces at all levels using multiple security approaches, such as a policy of least privilege, encrypting data at rest and in transit, minimizing data exposure to compute infrastructure, versioning data and models, and more. For a deep dive, please see this blog by Amazon Web Services.

- Zero Trust: In this approach, trust is never assumed, and every access request, whether from a user, application, or system, is rigorously verified. This involves implementing micro-segmentation, strong authentication (including multi-factor authentication), and continuous monitoring to minimize the attack surface.

- Data-Centric Security: Data is treated as the most valuable asset, and security measures are designed to protect data throughout the AI lifecycle – at rest, in transit, and in use – across training, fine-tuning and inferencing. This involves implementing encryption, access controls, data loss prevention (DLP) measures, and robust data governance policies. Access to resources, especially data, should be granted based on authenticated identities and associated permissions.

- Continuous Monitoring and Adaptation: The threat landscape is constantly evolving. It is important to implement continuous monitoring, threat intelligence, and incident response capabilities to quickly detect, analyze, and respond to emerging threats.
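To make the Zero Trust and least-privilege principles concrete, the sketch below shows a deny-by-default authorization check that is evaluated on every request. It is a simplified illustration, not a reference design: the `Request` dataclass, the `GRANTS` set, the principal and resource names, and the `is_allowed` function are all hypothetical, and a production system would typically delegate these decisions to an identity provider and a policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    principal: str                 # authenticated user or service identity
    strongly_authenticated: bool   # e.g. MFA or workload identity verified
    action: str
    resource: str


# Hypothetical least-privilege grants: (principal, action, resource).
GRANTS = frozenset({
    ("svc-finetune", "read", "dataset/claims-2023"),
    ("analyst-ana", "invoke", "model/summarizer"),
})


def is_allowed(request: Request) -> bool:
    """Deny by default; allow only verified identities with an explicit grant."""
    if not request.strongly_authenticated:
        return False
    return (request.principal, request.action, request.resource) in GRANTS
```

Because nothing is permitted unless it appears in `GRANTS`, a new service or dataset starts with no access at all, and each decision can be logged to feed the continuous monitoring described in the last principle.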

These architectural patterns and principles continue to evolve along with AI, so it is worth actively tracking new developments and state-of-the-art capabilities.

Cultural considerations for secure and impactful AI

Finally, architecting secure AI systems is as much about people and culture as it is about technology. Here are a few measures to instill a culture of secure and impactful AI in your organization:

- Executive Leadership and Commitment: CDOs, CIOs, and CISOs must champion data privacy and security, setting clear expectations, allocating resources, and fostering a culture of accountability.

- Cross-Functional Collaboration: Security teams, data scientists, AI engineers, legal and compliance teams, and other stakeholders must work together to ensure that AI systems are designed and deployed securely.

- Employee Training and Awareness: Regular security awareness training for all employees is essential to educate them about AI risks, data privacy best practices, and the organization’s security policies.

- Continuous Monitoring and Improvement: AI security is an ongoing process, not a one-time event. Organizations must continuously monitor their systems, assess risks, and adapt their security measures to stay ahead of evolving threats.

- Transparency and Communication: Openly communicating about AI risks, security measures, and data privacy practices builds trust with employees, customers, and other stakeholders.

By fostering a culture of trust and collaboration, organizations can create a more secure and resilient environment for AI innovation.

Conclusion

Architecting for trust in AI systems is a multifaceted endeavor that requires a holistic approach encompassing technology, processes, and people. By embracing modern security principles, navigating architectural choices thoughtfully, making sound infrastructure decisions, understanding data sensitivity and privacy considerations, proactively mitigating risks across the application stack, and staying abreast of emerging technologies, organizations can build AI systems that are impactful, secure, and cost-effective for optimal ROI.

Protopia AI’s Stained Glass Transform offers a powerful tool for enhancing data privacy and security, enabling organizations to leverage their most valuable data for AI without compromising confidentiality. By protecting sensitive data and enabling organizations to run their workloads across all types of compute environments, SGT empowers organizations to accelerate AI adoption, improve decision-making, and unlock new possibilities while upholding the highest standards of trust.

To explore how Protopia AI can empower you to build secure and impactful AI systems, contact our team of experts today.

About the Contributor

We are grateful to Sol Rashidi, former CDO, CAO, and CAIO of Fortune 100 companies, such as Estee Lauder, Merck, Sony Music, and Royal Caribbean. Sol holds eight patents and has received numerous accolades, including ‘Top 100 AI People’ 2023, ‘Top 75 Innovators of 2023,’ and ‘Forbes AI Maverick of the 21st Century’ 2022. She has also been recognized as one of the ‘Top 10 Global Women in AI & Data’ 2023, ‘50 Most Powerful Women in Tech’ 2022, and appeared on the ‘Global 100 Power List’ from 2021 to 2023 and ‘Top 100 Women in Business’ 2022.