Risks in AI systems and mitigation strategies

In partnership with Sol Rashidi, former CDAO at Fortune 100 organizations, and best-selling author

An introduction to AI Risks

Welcome back to our three-part series designed by and for leaders in data, information technology, and AI. In the first installment, we tackled the critical issue of overcoming barriers to data accessibility, exploring strategies to unlock the full potential of your data assets while ensuring compliance and security.

In this second article, we shift our focus to the mission-critical theme of preventing AI risks. We will explore notable risks to your data in AI systems, discuss lesser-known risks that public clouds create for AI workloads, elaborate on emerging industry standards for risk, and introduce some tactics to safeguard your organization and data.

Executives are discussing these risks at length, along with a variety of AI security frameworks to mitigate them. However, a lack of deep and broad experience is hindering the ability of CXOs to progress at a speed that matches the pace of innovation in AI.

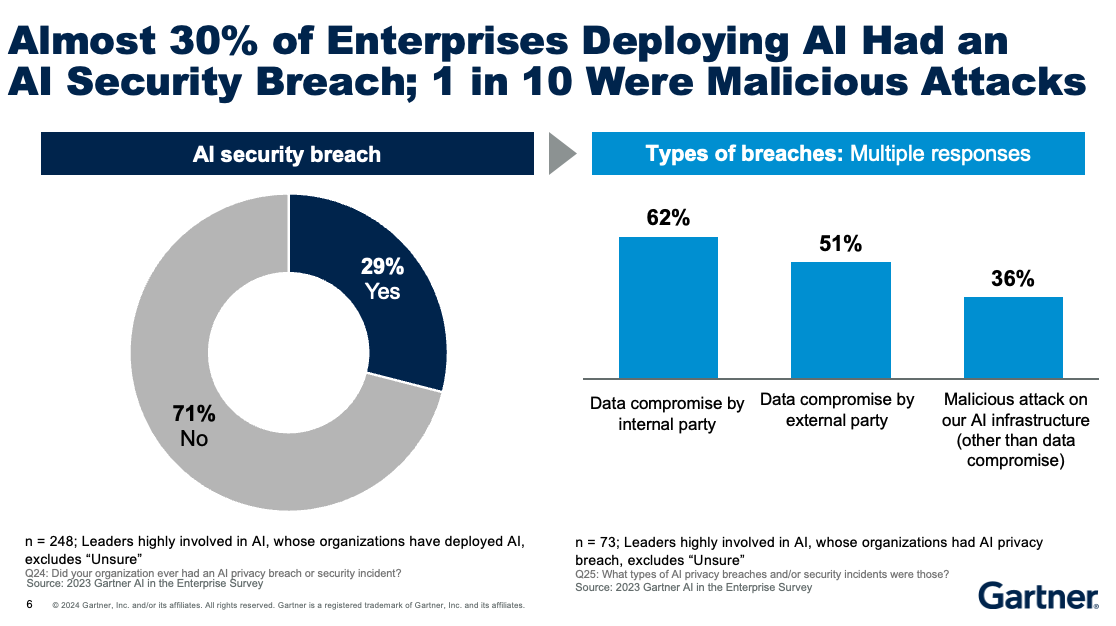

Figure 1 – 30% of enterprises deploying AI had a security breach, Gartner

The threats are real. Large Language Models rely on vast amounts of data for training and fine-tuning, amplifying the risk of data misuse, exposure, and leakage. Generative AI has expanded the attack surface companies must contend with. Public clouds, often the only path to procuring scarce GPUs, make traditional encryption-based approaches inadequate for securing data. And the growing sophistication of threat actors, thanks in part to LLMs, has fueled a surge in cybersecurity incidents. These risks often have catastrophic effects on the brand value and financial health of impacted firms.

For example, compromises in data security and privacy of AI systems caused breaches at major LLM providers. In the ChatGPT data leak in March 2023, a bug caused the chatbot to expose customer data, including credit card information. Artificial intelligence startup Anthropic, the company behind the ChatGPT rival Claude, had a data leak where a contractor sent an email containing non-sensitive customer information to an unauthorized third party.

Hallucinations, where AI systems generate false or fabricated responses, put brand reputations and consumer safety at risk. Air Canada was found financially liable by a Canadian tribunal for its chatbot’s statements, while Google lost $100 billion in market value after a factual error made by its chatbot Bard. Ensuring the quality and accuracy of input data is one of the main ways in which organizations limit hallucinations. To minimize hallucinations, leaders must explore approaches to making their most sensitive data accessible to AI while protecting it against a multitude of risks.

At the same time, there is increasing pressure to comply with a growing body of regulation around data security, privacy, and governance within AI systems. Notable regulations, such as the EU AI Act, which imposes penalties of up to 7% of global annual revenue for non-compliance, and President Biden’s Executive Order on AI, mean that data privacy and confidentiality are critical design concerns for modern AI systems.

Key AI Risks

As we all know, data security is a critical concern when deploying AI systems, particularly when handling sensitive and/or personal information. Organizations are maniacally focused on protecting data from unauthorized access, ensuring integrity, and safeguarding personal or sensitive information used to train the foundational models. As noted earlier, AI systems increase the attack surface significantly.

With that said, not all risks are created equal, and not all ‘data’ risks are ‘AI’ risks. A variety of AI risks have been listed below, and we’ve provided both definitions and consequences that drive the discussions in AI risk today.

- Data Breaches & Leakage – Exposure of sensitive data can harm customers, disrupt operations, and lead to legal consequences. Attackers might try to detect if an individual’s data was used for training (membership inference attack) or deduce sensitive information from model outputs (attribute inference attack).

- Supply Chain Risks – AI heavily relies on open-source datasets, models, and tools, introducing vulnerabilities that can extend to production components. Attacks could tamper with model functionalities (model subversion) or introduce compromised datasets (tainted dataset injection).

- Data Poisoning – Data poisoning is the deliberate manipulation of training data to corrupt the output and behavior of machine learning models. Threat actors can manipulate how AI systems behave by injecting tainted datasets into training data. The resulting AI outputs can be biased or highly inaccurate. Tainted data may also plant backdoors in AI systems that attackers can later exploit. Inaccurate predictions not only compromise decision-making, but can also lead to severe reputational damage.

- Feature Manipulation – Feature manipulation is the alteration of input features to deceive or mislead a machine learning model’s predictions. When it occurs, it can lead to incorrect outputs, flawed decision-making, and potentially severe operational and financial consequences for the enterprise. While infrequent, it does happen, so having the right access protocols is key to avoiding this risk.

- Hallucinations & Fakes – Hallucinations and fake data in AI refer to the generation of incorrect or misleading information by a model. When this happens, it can lead to erroneous conclusions, damage trust, and result in poor decision-making and financial losses for the enterprise.

- Backdoor Trojan Horse Model – A backdoor or Trojan horse model is a malicious model embedded with hidden functionality that allows unauthorized access or control. When it occurs, it can lead to security breaches, data theft, and significant operational and financial damage for the enterprise.

- Lack of Compliance – Lack of compliance is the enterprise’s failure to adhere to regulatory and industry standards. While uncommon, companies can delay putting in place the protocols needed to be compliant. When it occurs, it can result in hefty fines, legal penalties, and reputational damage for the enterprise.
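To make the membership inference attack mentioned in the list above more concrete, here is a minimal sketch of one well-known variant, the loss-threshold attack: records on which a model has unusually low loss are guessed to have been part of its training data. The loss values, the threshold, and the function names are illustrative assumptions, not measurements from any real model.

```python
from typing import Sequence


def infer_membership(losses: Sequence[float], threshold: float) -> list[bool]:
    """Guess 'was in the training set' when the model's loss on a record is low."""
    return [loss < threshold for loss in losses]


def attack_accuracy(guesses: Sequence[bool], truth: Sequence[bool]) -> float:
    """Fraction of records whose membership the attacker guessed correctly."""
    correct = sum(g == t for g, t in zip(guesses, truth))
    return correct / len(truth)


# Hypothetical per-record losses an attacker obtained by querying a model.
member_losses = [0.02, 0.05, 0.01, 0.30]      # records that were in training
non_member_losses = [0.90, 0.45, 0.08, 1.20]  # records that were not

losses = member_losses + non_member_losses
truth = [True] * len(member_losses) + [False] * len(non_member_losses)

guesses = infer_membership(losses, threshold=0.10)
accuracy = attack_accuracy(guesses, truth)
print(f"attack accuracy: {accuracy:.2f}")
```

An accuracy well above 0.5 on a balanced sample like this one suggests the model behaves measurably differently on its training records, which is the signal such attacks rely on.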

However, of all the risks discussed, Data Breaches & Leakage is top of mind and the most frequently discussed. Organizations don’t just have their most sensitive, mission-critical data and secrets stolen for malicious purposes; they also have to bear the legal and reputational consequences, plus PR nightmares. Organizations are therefore examining all the risks associated with data leakage, along with the data governance policies and supporting privacy-enhancing technologies needed to protect personal, corporate, and proprietary data.

As an example …

“On April 12, 2024, Cyberhaven detected 6,352 attempts to paste corporate data into ChatGPT for every 100,000 employees of its customers.”

Source: ITBrew

To make the situation real, below are a few noteworthy examples of data leakage incidents related to AI projects:

- A major health insurance company faced a data breach where sensitive patient data was leaked due to insufficient data protection during AI model training. The breach resulted in substantial financial penalties and loss of customer trust.

- Similarly, a financial institution experienced sensitive data theft when adversaries accessed proprietary trading algorithms and client data, leading to significant financial losses and a damaged reputation.

- A leading e-commerce company suffered from prompt theft, where valuable customer interaction data was extracted by competitors, undermining the company’s competitive advantage.

As a result, measures such as encryption, masking, stochastic transformations, access controls, and continuous monitoring are being discussed and deployed to safeguard against unwanted exposure and data leakage. It is important to note that all these techniques are complementary, and no single measure eliminates the threat surface completely. We already noted that standard techniques like masking/tokenization and encryption are inadequate on their own for the dramatically increased threat surface that AI operations create.

AI systems are compute-hungry, and public clouds are often the most cost-effective, scalable, and performant environment to access the resources required. However, third-party cloud services introduce a multitude of lesser-known, but equally critical, risks. Powerful machine learning models require unencrypted access to user inputs and data, so end-to-end encryption isn’t feasible. When sensitive data resides on cloud deployments, traditional security guarantees and contracts do not address all concerns.
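As a rough illustration of two of the measures named above, masking and tokenization, the sketch below scrubs a prompt before it leaves a trusted environment. The regular expressions, the `tok_` prefix, the key, and the function names are assumptions made for this example; a production system would need far more thorough detection, and, as noted, these techniques are only one complementary layer.

```python
import hashlib
import hmac
import re

# Simplified, illustrative patterns; real detectors need to be much stricter.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def tokenize(value: str, key: bytes) -> str:
    """Replace a value with a keyed, deterministic token (same input, same token)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:12]}"


def mask_card(match: re.Match) -> str:
    """Mask every digit of a card number except the last four."""
    digits = re.sub(r"\D", "", match.group())
    return "*" * (len(digits) - 4) + digits[-4:]


def sanitize_prompt(text: str, key: bytes) -> str:
    """Mask card numbers, then tokenize email addresses."""
    text = CARD_RE.sub(mask_card, text)
    return EMAIL_RE.sub(lambda m: tokenize(m.group(), key), text)


prompt = "Refund jane.doe@example.com on card 4111 1111 1111 1111."
safe_prompt = sanitize_prompt(prompt, key=b"demo-key-not-for-production")
print(safe_prompt)
```

Because the token is keyed and deterministic, the same email address maps to the same token across prompts, which preserves joins and lookups without revealing the address to anyone who lacks the key.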

- First, security guarantees in public clouds are hard to enforce as logging services can inadvertently record and expose your data.

- Second, cloud systems are notoriously opaque about the underlying software components that power their services and these change fluidly. Vulnerabilities in underlying software may compromise your data.

- Finally, many individuals, like site reliability engineers or administrators, have privileged access to cloud systems to ensure reliability and uptime. This significantly expands the threat of malicious actors stealing credentials to compromise your sensitive information.
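The first point, that logs can inadvertently capture sensitive values, can be partly addressed on the application side. The following is a minimal sketch using Python's standard `logging` module; the secret pattern, the logger name, and the `ListHandler` helper are assumptions for illustration, and a filter like this does nothing about logs the cloud provider itself records.

```python
import logging
import re

# Hypothetical pattern: key=value pairs whose key suggests a secret.
SECRET_RE = re.compile(r"(?i)\b(api[_-]?key|password|token)\s*[=:]\s*\S+")


class RedactingFilter(logging.Filter):
    """Rewrite each log record so secret values never reach the handler."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # message with its arguments applied
        record.msg = SECRET_RE.sub(lambda m: f"{m.group(1)}=[REDACTED]", message)
        record.args = ()  # arguments are already folded into msg
        return True  # keep the record, now redacted


class ListHandler(logging.Handler):
    """Collects formatted messages in memory so the result can be inspected."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(record.getMessage())


handler = ListHandler()
handler.addFilter(RedactingFilter())

logger = logging.getLogger("ai_gateway_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info("calling model endpoint user=%s api_key=%s", "ana", "sk-12345")
print(handler.lines[0])
```

Attaching the filter to the handler rather than to a single logger means every record routed to that handler is redacted, whichever part of the application emitted it.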

AI Risk & Security Frameworks

While the risks are widely recognized, discussion continues over which frameworks organizations can deploy to manage the risks associated with AI effectively.

A few noteworthy frameworks include:

- TRiSM: Gartner created the Trust, Risk, and Security Management Framework. Its focus is on Trust (transparency and ethical AI practices), Risk Management, Security, Compliance, Governance, and Monitoring to address the challenges associated with the development and deployment of AI systems. This includes risks related to:

  - Bias and discrimination

  - Privacy violations and data breaches

  - Lack of transparency and explainability

  - Adversarial attacks and security vulnerabilities

  - Regulatory and compliance issues

The framework advocates for conducting thorough risk assessments and impact analyses before deploying AI systems, especially in high-risk domains like finance, healthcare, and law enforcement.

- OWASP: The Open Web Application Security Project (OWASP) also provides comprehensive guidance for securing AI systems, emphasizing the importance of protecting data at every stage of the AI lifecycle: Data Collection, Data Processing, Model Training, Model Evaluation, Model Deployment, and Monitoring and Maintenance. It was created to bring a security focus to all AI models and environments, and it emphasizes data protection, model security, and ongoing monitoring.

- NIST: The National Institute of Standards and Technology (NIST) offers the AI Risk Management Framework, which focuses on ensuring the reliability, security, and privacy of AI systems. The framework organizes AI risk management around four core functions: Govern, Map, Measure, and Manage. The NIST framework is suitable for organizations seeking a comprehensive risk management framework that can be applied across different AI models and environments, whereas OWASP is ideal for organizations looking for a security-focused framework that addresses specific vulnerabilities and threats throughout the AI lifecycle.

- MITRE ATT&CK: MITRE ATT&CK is a comprehensive knowledge base of adversary tactics and techniques observed in real-world cyber attacks. It provides a detailed matrix that helps organizations identify and mitigate potential threats to AI systems. By understanding common attacker behaviors, organizations can enhance their threat detection, prevention, and response strategies, making it a valuable tool for protecting AI environments from sophisticated cyber threats.

Importance of Data Classification

Regardless of where you are in the AI lifecycle and which framework suits your organization best, one thing is clear – no AI Security & Risk Framework will be effective or deployable until you’ve gone through a data classification exercise. Data classification is the first and most critical step in managing AI risks.

Why? To understand what data you need to govern, how it needs to be governed, and with what level of rigor, you need to categorize your data domains, and the data sets within them, by their level of sensitivity and the impact of any exposure. Effective data classification enables organizations to prioritize protection efforts, ensuring that the most sensitive data receives the highest level of security. The alternative, assuming all data requires the highest level of security, has significant cost implications. Classification is the first and foundational step in the life cycle of AI risk mitigation.

We shared a framework for classifying your data assets in the first part of the series.

Whether it’s the Chief Data Officer (CDO), the Chief Information Officer (CIO), the Chief Information Security Officer (CISO), or all three, the CXOs are accountable for classifying the data, since classification plays a pivotal role in comprehensive risk mitigation and determines how stringent the protection measures need to be.

To enable data classification so that your AI risk framework efforts are effective, the following foundational steps are essential before deploying your framework:

- Identifying your Data Sources

- Identifying the Data Inventory in the sources

- Identifying the Data Owners for the inventory

- Classifying the data across the varying levels (public, internal, sensitive, confidential)

- Publishing your Data Classification decisions as your internal policy for data access.

This will operate as your manifesto for all data access, security, and governance decisions and will be the foundational level for designing your AI Risk & Security Framework.
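The steps above can be sketched as a small data inventory with owners and classification levels, plus a published policy that downstream AI workflows consult. Everything named here (the sources, assets, owners, environments, and the assumption that the four levels are ordered from least to most restricted) is hypothetical and only meant to show the shape of such a policy.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Classification levels, assumed to be ordered from least to most restricted."""
    PUBLIC = 0
    INTERNAL = 1
    SENSITIVE = 2
    CONFIDENTIAL = 3


@dataclass(frozen=True)
class DataAsset:
    source: str   # step 1: the data source
    name: str     # step 2: the inventory item within that source
    owner: str    # step 3: the accountable data owner
    level: Level  # step 4: the assigned classification


# Hypothetical inventory for illustration only.
INVENTORY = [
    DataAsset("cms", "marketing_copy", "brand-team", Level.PUBLIC),
    DataAsset("crm", "customer_emails", "sales-ops", Level.SENSITIVE),
    DataAsset("erp", "payroll", "finance", Level.CONFIDENTIAL),
]

# Step 5: the published policy, here the highest level each
# (hypothetical) AI environment is allowed to receive.
MAX_LEVEL_BY_ENVIRONMENT = {
    "public_llm_api": Level.PUBLIC,
    "private_cloud_finetune": Level.SENSITIVE,
    "on_prem_training": Level.CONFIDENTIAL,
}


def allowed_assets(environment: str) -> list[str]:
    """Names of the assets that policy permits in the given environment."""
    ceiling = MAX_LEVEL_BY_ENVIRONMENT[environment]
    return [asset.name for asset in INVENTORY if asset.level <= ceiling]


print(allowed_assets("private_cloud_finetune"))
```

Keeping the policy as data rather than scattering checks through pipelines makes it straightforward to review, audit, and update as classifications change.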

How Protopia Helps

Protopia AI’s proprietary Stained Glass Transform (SGT) technology was created to safeguard your most valuable, private data and rapidly build AI innovation. SGT converts the input data and prompts that organizations absolutely do not wish exposed when running their AI into randomized representations. These are useless for human interpretation but retain the full utility of the underlying data for AI systems. This mitigates your AI security risks in a few ways:

Data Leakage, Sensitive Information Theft

| Challenge | Protopia’s Solution |
| --- | --- |
| AI initiatives lack access to the most valuable enterprise data due to security and privacy concerns, hindering potential breakthroughs. | SGT creates safe representations of your organization’s and your enterprise customers’ most valuable data to maximize the quality of data available for Generative AI while ensuring that no raw data leaves its root of trust. |
| New data workflows and processes, driven by the increased demand for AI, move sensitive information more often, increasing its exposure to malicious actors and compromising your security posture. | Sensitive data is transformed into randomized representations, unlocking AI/LLM utility without exposing or leaking original data. |

Hallucinations and poor model accuracy

| Challenge | Protopia’s Solution |
| --- | --- |
| Hallucinations erode credibility and trust in AI rollouts. | SGT unlocks safe access to the most valuable enterprise data so you can improve the quality of your AI predictions using better inputs and reduce hallucinations. |
| Redacted or synthetic data lowers model accuracy, hampering AI initiatives. | SGT lets you fine-tune or train models with randomized representations without sacrificing accuracy. |
| Efficacy is hindered by limited access to data in hybrid execution environments, which are necessary due to GPU/compute constraints or data sovereignty requirements. | SGT enables data availability across hybrid environments through directly usable, irreversible transformations, eliminating the need to transmit or locally copy actual data outside your trusted environment. |

Prompt Leakage

| Challenge | Protopia’s Solution |
| --- | --- |
| Inference traffic interception risks exposing sensitive or personally identifiable information (PII) to hackers. | Enhances the security of prompts to model endpoints, ensuring consistent protection of sensitive user data. |
| Malicious actors can compromise data, user, or model security by stealing prompts to extract sensitive details. | Strengthens the protection of prompts and sensitive context data in Retrieval Augmented Generation (RAG) systems for enterprises. |

You can learn more about the typical AI application stack and how Protopia supports every layer in this article.

Conclusion

As AI technologies continue to advance and integrate into business operations, the risks associated with data privacy, security, and integrity become increasingly significant. Executives must understand these risks and implement robust frameworks to mitigate them. Data classification is the foundational step in this process, enabling organizations to prioritize protection efforts and safeguard their most sensitive data. AI risk frameworks such as OWASP, NIST, MITRE ATT&CK, and Gartner TRiSM help organizations devise the right strategies, while techniques like Protopia’s Stained Glass Transform significantly curtail the attack surface beyond common approaches.

In the next blog – Part 3: Architecting for Trust: Building Systems for Privacy-Preserving AI – we’ll see how leading organizations architect their data systems and flows for secure, privacy-preserving AI. We’ll also outline approaches for balancing security and performance by comparing security enclaves with distributed environments. And we will continue with future deep dives into popular privacy-enhancing techniques, including masking/tokenization and trusted execution environments. Subscribe to our blog to receive the content in your inbox.

To enhance the security of your data flows for AI, please contact our team of experts.

About the Contributor

We are grateful to Sol Rashidi, former CDO, CAO, and CAIO of Fortune 100 companies, such as Estee Lauder, Merck, Sony Music, and Royal Caribbean. Sol holds eight patents and has received numerous accolades, including ‘Top 100 AI People’ 2023, ‘Top 75 Innovators of 2023,’ and ‘Forbes AI Maverick of the 21st Century’ 2022. She has also been recognized as one of the ‘Top 10 Global Women in AI & Data’ 2023, ‘50 Most Powerful Women in Tech’ 2022, and appeared on the ‘Global 100 Power List’ from 2021 to 2023 and ‘Top 100 Women in Business’ 2022.